I did gamut plots again, but with just the ColorChecker portion. I also added the CC chart in Lab format from Dry Creek Photo. Here is the Dry Creek version (labeled with the Lab values as read in Photoshop when you open the Lab version from DCP, not the sRGB version I'm showing here).

The gamut looks like this in 2D (done from the Lab version of the DCP image with no labels.)
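For anyone who wants to reproduce a 2D outline like this without a dedicated gamut tool, here is a minimal sketch. It assumes the 2D gamut is the convex hull of the (a*, b*) values of the pixels, which may not be how the plotting software actually draws it. The function names and the sample Lab values are made up for illustration.

```python
def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); > 0 means a left turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(vertices):
    """Shoelace formula for the area of a simple polygon."""
    total = 0.0
    for i in range(len(vertices)):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % len(vertices)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def ab_gamut_area(lab_pixels):
    """Area of the a*b* footprint; L* is ignored (assumed hull-based outline)."""
    ab = [(a, b) for (_L, a, b) in lab_pixels]
    return polygon_area(convex_hull(ab))


# Made-up Lab samples: four corners of a square in a*b* plus one interior point.
samples = [(50.0, -10.0, -10.0), (50.0, 10.0, -10.0),
           (50.0, 10.0, 10.0), (50.0, -10.0, 10.0), (60.0, 0.0, 0.0)]
print(ab_gamut_area(samples))  # 400.0
```

A convex hull overstates the footprint wherever the real outline is concave, so numbers from this sketch are only comparable with each other, not with another tool's output.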

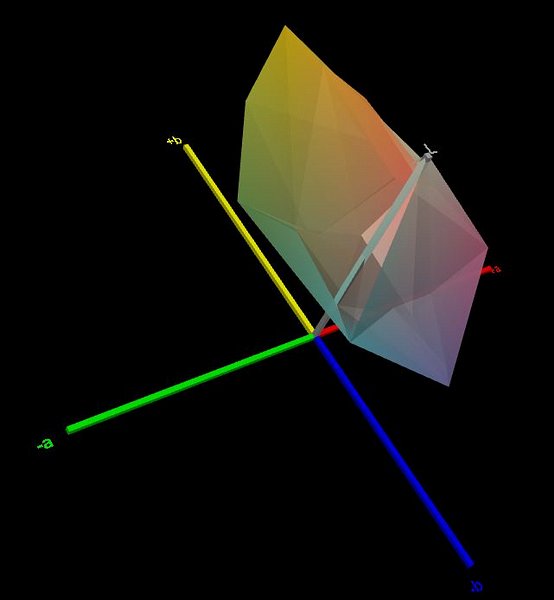

3D plot

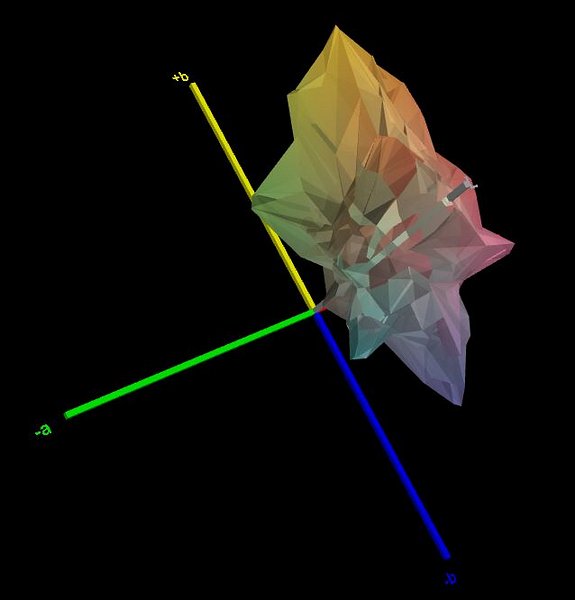

3D plot. It looks smoother (DCP's chart is an average, or maybe it was CGI?).

Here is the CC portion of my set image shot in real daylight after I used Photoshop's Perspective Crop to crop out just the CC chart.

Differences in processing: I opened the images in Lab mode in ACR, but this time I switched the profile from "Adobe Color" to "Adobe Standard" and white balanced on the 2nd gray chip (instead of on the WhiBal card). The Dry Creek Photo chip was 81,-1,0. I adjusted exposure in ACR so this chip was 81,0,0. (The ColorChecker Passport card's bottom row isn't really neutral according to Robin Myers, which is why I originally used my WhiBal card.)
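To put a number on how close the two gray-chip readings are, here is a small sketch using the CIE76 color difference, which is just the Euclidean distance between two Lab triples. The two values are the ones quoted above; the function name is only illustrative, and CIE76 is a choice made here for simplicity rather than anything used in the processing.

```python
import math


def delta_e_76(lab1, lab2):
    """CIE76 color difference: straight-line distance in L*a*b*."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(lab1, lab2)))


dcp_chip = (81.0, -1.0, 0.0)  # Dry Creek Photo reading of the gray chip
my_chip = (81.0, 0.0, 0.0)    # the same chip after white balance and exposure in ACR

print(delta_e_76(dcp_chip, my_chip))  # 1.0
```

The whole difference is the one unit on the a* axis, since L* and b* already match.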

Here is the 2D plot of the gamut of my CC card shot in daylight.

3D plot.

3D plot.

And here is a 2D plot of the gamut of my CC card shot in daylight against the gamut of the Dry Creek Photo CC card.

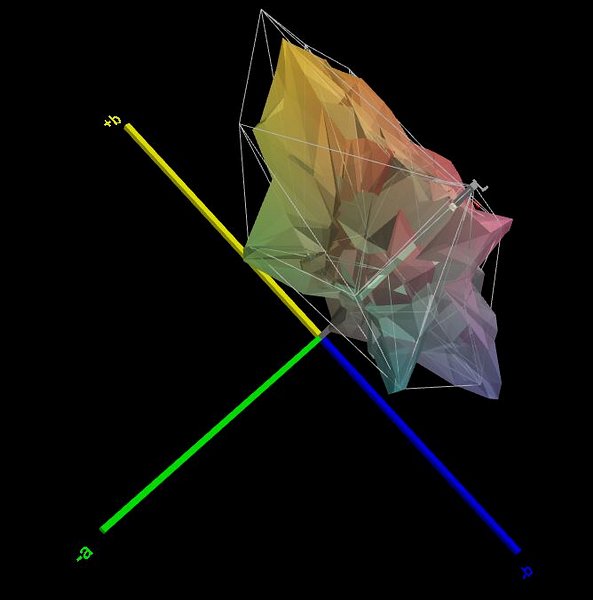

3D plot.

3D plot.

The gamut of the DCP card doesn't go as close to black as the gamuts of my CC card, presumably because the surround of the DCP card is gray while the surround of my ColorChecker Passport card is closer to black.

I don't know why the gamut of the DCP card is larger than my own CC card shots. Possibly because of different raw converters? Or because DCP used a different camera profile than Adobe Standard? All of my CC card shots were processed the same way (opened and saved in Lab, using the Adobe Standard profile). Or (after rereading the DCP page) maybe the DCP chart is synthetic and was created entirely in Photoshop, and wasn't shot with a camera at all.

Here is an updated page of my 3D gamut plots showing all the illuminants I used before (Walmart, Cree, Aperture, real daylight) in different permutations, with the DCP card mixed in.

When looking at the table sorted by Cubic Colorspace Units at the bottom of the above page I see that the gamut plots I did earlier of the entire sets are a lot larger than the gamut of just the CC card. Maybe because the real world objects in my set are more saturated than the CC card? Or maybe they are just different hues, so there are more samples?
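One rough way to tell those two explanations apart is to look at peak chroma and hue coverage separately. The sketch below is only an assumption about how that could be checked: it computes the maximum chroma C* = sqrt(a*² + b*²) and counts how many hue-angle bins contain at least one reasonably colorful pixel. The bin count, the chroma threshold, the function name, and the sample values are all arbitrary placeholders.

```python
import math


def chroma_and_hue_coverage(lab_pixels, hue_bins=36, min_chroma=5.0):
    """Return (max chroma, number of occupied hue bins).

    Pixels with chroma below min_chroma are treated as near-neutral and
    skipped for the hue count, because their hue angle is mostly noise.
    """
    bin_width = 360.0 / hue_bins
    max_chroma = 0.0
    occupied = set()
    for _L, a, b in lab_pixels:
        chroma = math.hypot(a, b)
        max_chroma = max(max_chroma, chroma)
        if chroma >= min_chroma:
            hue = math.degrees(math.atan2(b, a)) % 360.0
            occupied.add(int(hue // bin_width) % hue_bins)
    return max_chroma, len(occupied)


# Made-up samples: one reddish, one yellowish, one near-neutral pixel.
samples = [(50.0, 30.0, 0.0), (50.0, 0.0, 40.0), (50.0, 1.0, 1.0)]
print(chroma_and_hue_coverage(samples))  # (40.0, 2)
```

If the full set shows a much higher maximum chroma than the CC crop, saturation is the likely driver; if the maximum is similar but many more hue bins are occupied, the extra volume is coming from hues the chart doesn't sample.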

Is any of this showing us anything about color rendering under different illuminants? Or am I just making colorful Rorschach tests?